CNNs - How it all goes together

The idea of this post is to figure out how each part of a convolutional neural network works.

from fastai import *

from fastai.vision import *

Fetching our images

The listing below shows the contents of our dataset folder: one sub-folder per product, each holding that product’s images (44 folders in all). We’re working with a small sample dataset to illustrate what happens inside the CNN.

DATA_PATH = Path('/home/sidravic/downloaded_images/internal_v4_subset')

DATA_PATH.ls()

[PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/1bae302b-a651-40b8-99e7-3b18b0ee30fc'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/1bab59ec-f857-41e2-9bf4-a0973798f165'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/01c7c62c-10b0-4e07-a0f1-b450334328d0'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/0a16fe41-155b-41b7-b88e-93e49aee1ea8'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/1e7eab0f-1a95-44d4-829a-d0394ba33a8a'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/1a3ac4a0-ae97-4041-857e-f71c79b2d7ad'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/4b060e46-ebba-432a-8ce2-2aa3d9c52c9d'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/2a116698-b1ab-4be2-b116-e07fd4c269b5'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/4a683c8d-396c-421e-8f42-5ffe71b27425'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/0fa6b628-52d1-48e9-8963-0043234563ff'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/1d271822-7e90-41bc-b1fe-c73c186c370c'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/1fe7cf28-2f7b-4f84-9018-d01f0c3a5d81'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/3f80a18a-b301-4263-8056-96b6c3ac02e1'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/1b222822-93ce-4d66-ae1e-933a9948917b'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/0f3e7823-8dce-4c36-9950-a78c5fb6624e'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/1b8cf671-d44f-48e6-a0ae-7070ceb5a18f'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/0b9af36c-f808-43eb-9818-3c385b1ac711'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/04ed7319-7887-4323-92f7-f37b78d8c4eb'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/4c62dd41-907a-41d8-94fb-fc726f3aa62e'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/2b5dcdbc-05f2-40c5-b794-72be0afd073b'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/3b6529f3-4d3b-48a9-a2ae-754483ca166a'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/1b86424c-b6f1-4848-a67d-639e99ea6f57'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/2c73666a-f7e1-4265-85a8-ec0c9cf48b7e'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/1f1a8f35-63ec-484f-8e39-44cb227d08d2'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/2fb2b8bb-9d6e-4f15-8eec-ffcb45b5a41c'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/4b5ef02f-b8cd-4f6c-91ac-ed182dc5a672'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/0b97f4b7-11de-48c7-95da-b9958370a0d8'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/2cd4c9ef-0b5a-4d88-bfef-f81acb980cdb'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/0a2adcca-a017-4a79-8b1f-304f0c56603a'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/4dfdac1a-1788-4e0a-9aad-74672b92311c'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/4aebe856-6250-4b46-9daf-1c10a462c455'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/3f02bd7e-88c5-4d0b-bfc1-47ae5de9b55a'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/04ded73b-eaad-430d-8a1a-ad1156a446ba'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/0f6afe3a-42eb-49fb-b9e8-36a5748650e3'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/1a21cbc0-44c8-4581-bc3f-0f8b32292864'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/0eeaca3f-aba9-4d0d-a416-3470b9f89853'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/0ea8c046-e637-4abd-9f19-9c4c403f997c'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/1e64c72d-5935-439f-8f50-918cb34fa2cd'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/2b4e90f5-d2c5-4058-825b-96d636d841e9'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/2a32f2bf-6c1c-47ca-a4eb-e0f95d7bf583'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/3a11d898-f5a2-4e72-9b21-58e9a91d82b2'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/0e177daa-4ad1-4d36-b4e5-26b322315d0a'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/0f68b0c9-802e-4698-bdb2-634bdb5d7211'),

PosixPath('/home/sidravic/downloaded_images/internal_v4_subset/0f8f52d8-b78f-4904-b5e1-3e65136209b0')]

About our dataset

We simply use each product’s unique ID as its class label. The ID could be any unique identifier the system uses for a product. We could even replace it with the product’s name, as long as that name is unique.
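Because each product has its own folder, an image’s label is simply the name of the folder it sits in. That is how `ImageDataBunch.from_folder` with `train="."` picks up the product IDs as labels. Here is a minimal `pathlib` sketch of that rule. The helper `label_from_path` and the file name `example.jpg` are hypothetical, used only for illustration.

```python
from pathlib import PurePosixPath


def label_from_path(img_path: str) -> str:
    """Return the class label for an image stored as <root>/<product_id>/<file>."""
    # The label is the name of the image's parent folder.
    return PurePosixPath(img_path).parent.name


# Hypothetical file name inside one of the product folders listed above.
p = '/home/sidravic/downloaded_images/internal_v4_subset/1bae302b-a651-40b8-99e7-3b18b0ee30fc/example.jpg'
print(label_from_path(p))  # 1bae302b-a651-40b8-99e7-3b18b0ee30fc
```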

transforms = get_transforms(do_flip=True, max_zoom=1.2, max_rotate=10.0)

data = ImageDataBunch.from_folder(DATA_PATH,

train=".",

valid_pct=0.2,

ds_tfms=transforms, size=300, num_workers=1).normalize(imagenet_stats)

- We’ve loaded the data as a `DataBunch`, which is one way of doing it. The approach may vary depending on the underlying library you’re using.

- How we load the data is irrelevant to what we’re aiming to understand, which is how the different layers of a CNN actually fit together.

- Briefly, `get_transforms` builds a list of transforms: random flips, zooms of up to 1.2x and rotations of up to 10 degrees. Passing this list as `ds_tfms` tells the image loader to apply the transforms to the training images at random. As a result, the CNN sees each image with a different random combination of these transformations as it works through the images in batches. The batch size is 64 images, fastai’s default, because we don’t pass a `bs` argument.

- Transformations prepare the model for the noise and distortions commonly present in real input data. Here, input data means the images passed to the model for predictions once it is trained.

- `ImageDataBunch` loads the images from the folder specified by `DATA_PATH`. The argument `train="."` says the class folders sit directly under that folder. The `valid_pct=0.2` parameter holds out a random 20% of the images for validation, and `size=300` resizes the images to 300x300. We then normalize the images with `imagenet_stats`. We use `imagenet_stats` because we intend to train this model on top of a ResNet-50 pretrained on ImageNet. Normalizing our inputs with the same per-channel means and standard deviations used for that pretraining keeps them on the scale the pretrained weights expect. If we weren’t using transfer learning, these particular statistics may not be relevant.
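To make the random-augmentation idea concrete, here is a minimal numpy sketch of one such transform: a left-to-right flip applied with probability 0.5. This is roughly what `do_flip=True` asks for. fastai’s own implementation differs and also handles zoom and rotation. The function name `random_flip` is hypothetical.

```python
import numpy as np


def random_flip(img: np.ndarray, rng: np.random.Generator, p: float = 0.5) -> np.ndarray:
    """Flip a channels-first image (C, H, W) left-to-right with probability p."""
    if rng.random() < p:
        # Reverse the width axis; channels and rows are untouched.
        return img[:, :, ::-1]
    return img


rng = np.random.default_rng(0)
img = np.arange(6, dtype=float).reshape(1, 2, 3)  # 1 channel, 2 rows, 3 columns

# Each time the image is drawn, the flip may or may not be applied.
flips = [random_flip(img, rng) for _ in range(1000)]
n_flipped = sum(not np.array_equal(f, img) for f in flips)
print(n_flipped / len(flips))  # fraction of draws that were flipped
```

Because the decision is random on every draw, the model rarely sees exactly the same pixels twice. That is the point of augmentation.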

imagenet_stats

([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
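These are the per-channel (R, G, B) means and standard deviations. Normalizing replaces each pixel value $x$ in channel $c$ with $(x - \mu_c) / \sigma_c$. Here is a minimal numpy sketch of that arithmetic, assuming pixel values have already been scaled to [0, 1]. fastai applies it to whole batches on the fly, and the helper names below are hypothetical.

```python
import numpy as np

# Per-channel (R, G, B) statistics, copied from the imagenet_stats output above.
MEAN = np.array([0.485, 0.456, 0.406])
STD = np.array([0.229, 0.224, 0.225])


def normalize(img: np.ndarray) -> np.ndarray:
    """Normalize a channels-first image (3, H, W) with values in [0, 1]."""
    # Reshape the stats to (3, 1, 1) so they broadcast over height and width.
    return (img - MEAN[:, None, None]) / STD[:, None, None]


def denormalize(img: np.ndarray) -> np.ndarray:
    """Undo normalize(): multiply by the std, then add the mean back."""
    return img * STD[:, None, None] + MEAN[:, None, None]


white = np.ones((3, 2, 2))  # a tiny all-white image
print(normalize(white)[:, 0, 0])  # roughly 2.25, 2.43, 2.64 for R, G, B
```

The shape of the image is unchanged; only the values move so that each channel is roughly centered on zero.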

The classes in the dataset are the product IDs we referred to earlier, i.e. the folder names. They are just identifiers from our system and could equally be product names.

data.classes[:5]

['01c7c62c-10b0-4e07-a0f1-b450334328d0',

'04ded73b-eaad-430d-8a1a-ad1156a446ba',

'04ed7319-7887-4323-92f7-f37b78d8c4eb',

'0a16fe41-155b-41b7-b88e-93e49aee1ea8',

'0a2adcca-a017-4a79-8b1f-304f0c56603a']
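The class list above is sorted alphabetically. This suggests that each label is stored as its position in the sorted list, and the integer labels printed by `data.one_batch()` further down are such positions. Here is a minimal plain-Python sketch of that encoding, using four of the folder names. It is an assumption about the mechanism, not fastai’s code.

```python
# Four of the 44 class folder names from the listing above.
folders = [
    '1bae302b-a651-40b8-99e7-3b18b0ee30fc',
    '01c7c62c-10b0-4e07-a0f1-b450334328d0',
    '0a16fe41-155b-41b7-b88e-93e49aee1ea8',
    '04ed7319-7887-4323-92f7-f37b78d8c4eb',
]

# Sort the names and number them: label string -> integer index.
classes = sorted(folders)
class_to_idx = {name: i for i, name in enumerate(classes)}

# Decoding goes the other way: integer index -> label string.
print(classes[0])  # 01c7c62c-10b0-4e07-a0f1-b450334328d0
print(class_to_idx['1bae302b-a651-40b8-99e7-3b18b0ee30fc'])  # 3 (in this 4-item subset only)
```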

data.show_batch(rows=2, figsize=(8, 8))

These are the images we’re working with, each shown with its corresponding product ID as its title.

learner = cnn_learner(data, models.resnet50, metrics=error_rate)

Downloading: "https://download.pytorch.org/models/resnet50-19c8e357.pth" to /home/sidravic/snap/code/common/.cache/torch/checkpoints/resnet50-19c8e357.pth

100%|██████████| 97.8M/97.8M [01:08<00:00, 1.50MB/s]

How our data looks inside

data.train_ds.x, data.train_ds.y

(ImageList (336 items)

Image (3, 225, 225),Image (3, 225, 225),Image (3, 255, 197),Image (3, 275, 183),Image (3, 225, 225)

Path: /home/sidravic/downloaded_images/internal_v4_subset,

CategoryList (336 items)

1bae302b-a651-40b8-99e7-3b18b0ee30fc,1bae302b-a651-40b8-99e7-3b18b0ee30fc,1bae302b-a651-40b8-99e7-3b18b0ee30fc,1bae302b-a651-40b8-99e7-3b18b0ee30fc,1bae302b-a651-40b8-99e7-3b18b0ee30fc

Path: /home/sidravic/downloaded_images/internal_v4_subset)

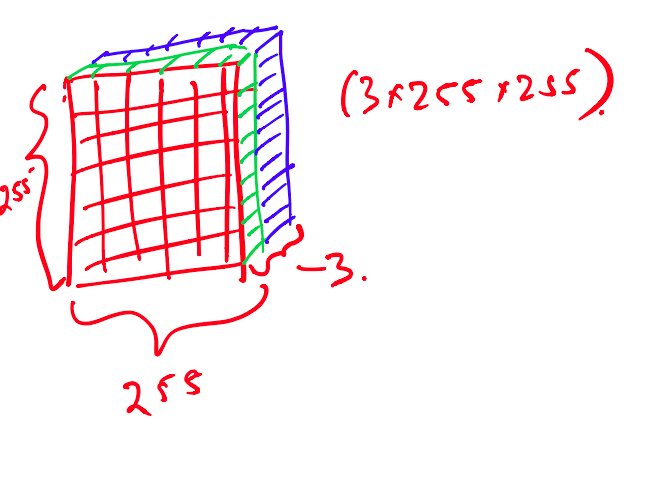

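- Each image is stored as a rank-3 tensor (think of a stack of matrices) of shape channels × height × width, for example $3 \times 225 \times 225$.

- The 3 in $3 \times 225 \times 225$ is the number of color channels (red, green and blue) of a color image. The other two numbers are the height and width in pixels.

- That’s how a single image is stored. Note that `data.train_ds.x` reports each image at its original size, which varies (for example $3 \times 255 \times 197$). Normalizing with `imagenet_stats` changes the pixel values, not the shape. It is the `size=300` transform that resizes each image to $3 \times 300 \times 300$ when it is fetched from the dataset, as the next cell shows.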
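fastai’s `Image`, like PyTorch, stores pixels channels-first (channels × height × width). Image libraries such as PIL usually hand you height × width × channels instead. Here is a numpy sketch of the conversion and of what "rank 3" means in practice. The all-zero image is a stand-in, sized like one of the images above.

```python
import numpy as np

# Stand-in 255x197 RGB image in the height x width x channels layout
# that PIL/numpy usually use.
hwc = np.zeros((255, 197, 3), dtype=np.uint8)

# Channels-first layout: move the channel axis to the front.
chw = hwc.transpose(2, 0, 1)
print(chw.shape)  # (3, 255, 197)

# Each of the 3 slices is one color plane (R, G or B): a 2-D matrix of pixels.
red_plane = chw[0]
print(red_plane.shape)  # (255, 197)
```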

print(data.train_ds[15])

data.train_ds.x[15]

(Image (3, 300, 300), Category 01c7c62c-10b0-4e07-a0f1-b450334328d0)

data.one_batch()

(tensor([[[[0.4876, 0.4852, 0.4836, ..., 0.2380, 0.2366, 0.2337],

[0.4979, 0.4958, 0.4943, ..., 0.2383, 0.2377, 0.2344],

[0.5081, 0.5065, 0.5049, ..., 0.2393, 0.2390, 0.2352],

...,

[0.4722, 0.4788, 0.4831, ..., 0.5315, 0.5049, 0.4754],

[0.4725, 0.4775, 0.4829, ..., 0.5242, 0.4992, 0.4863],

[0.4885, 0.4899, 0.4879, ..., 0.5265, 0.5096, 0.5008]],

[[0.4980, 0.4960, 0.4949, ..., 0.2508, 0.2494, 0.2465],

[0.5052, 0.5036, 0.5025, ..., 0.2511, 0.2505, 0.2472],

[0.5123, 0.5112, 0.5101, ..., 0.2520, 0.2518, 0.2480],

...,

[0.4482, 0.4549, 0.4592, ..., 0.5326, 0.5049, 0.4754],

[0.4501, 0.4536, 0.4590, ..., 0.5247, 0.4992, 0.4863],

[0.4771, 0.4769, 0.4732, ..., 0.5265, 0.5096, 0.5008]],

[[0.5177, 0.5157, 0.5146, ..., 0.2804, 0.2791, 0.2762],

[0.5248, 0.5233, 0.5222, ..., 0.2808, 0.2801, 0.2769],

[0.5319, 0.5308, 0.5297, ..., 0.2817, 0.2815, 0.2776],

...,

[0.4482, 0.4549, 0.4592, ..., 0.5596, 0.5362, 0.5070],

[0.4520, 0.4536, 0.4590, ..., 0.5540, 0.5306, 0.5179],

[0.4926, 0.4904, 0.4834, ..., 0.5577, 0.5409, 0.5322]]],

[[[0.8574, 0.8574, 0.8574, ..., 0.7801, 0.8152, 0.8411],

[0.8574, 0.8574, 0.8574, ..., 0.7928, 0.8507, 0.9212],

[0.8574, 0.8574, 0.8574, ..., 0.8152, 0.9071, 0.9513],

...,

[0.8153, 0.8126, 0.8124, ..., 0.8075, 0.8075, 0.8075],

[0.8159, 0.8132, 0.8124, ..., 0.8075, 0.8075, 0.8075],

[0.8165, 0.8138, 0.8124, ..., 0.8075, 0.8075, 0.8075]],

[[0.8523, 0.8523, 0.8523, ..., 0.7484, 0.7849, 0.8025],

[0.8523, 0.8523, 0.8523, ..., 0.6760, 0.7119, 0.7549],

[0.8523, 0.8523, 0.8523, ..., 0.6546, 0.7242, 0.7560],

...,

[0.8153, 0.8126, 0.8124, ..., 0.8026, 0.8026, 0.8026],

[0.8159, 0.8132, 0.8124, ..., 0.8026, 0.8026, 0.8026],

[0.8165, 0.8138, 0.8124, ..., 0.8026, 0.8026, 0.8026]],

[[0.8423, 0.8423, 0.8423, ..., 0.5334, 0.5887, 0.6233],

[0.8423, 0.8423, 0.8423, ..., 0.4972, 0.5447, 0.5887],

[0.8423, 0.8423, 0.8423, ..., 0.4817, 0.5526, 0.5807],

...,

[0.8153, 0.8126, 0.8124, ..., 0.7928, 0.7928, 0.7928],

[0.8159, 0.8132, 0.8124, ..., 0.7928, 0.7928, 0.7928],

[0.8165, 0.8138, 0.8124, ..., 0.7928, 0.7928, 0.7928]]],

[[[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

...,

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000]],

[[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

...,

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000]],

[[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

...,

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000]]],

...,

[[[0.6490, 0.6403, 0.6375, ..., 0.8703, 0.8683, 0.8672],

[0.6555, 0.6455, 0.6394, ..., 0.8664, 0.8675, 0.8672],

[0.6553, 0.6475, 0.6334, ..., 0.8674, 0.8677, 0.8672],

...,

[0.8975, 0.9015, 0.8997, ..., 0.6015, 0.3193, 0.2780],

[0.8978, 0.8972, 0.8987, ..., 0.3883, 0.3401, 0.2856],

[0.8925, 0.8923, 0.8915, ..., 0.7867, 0.6211, 0.4140]],

[[0.4022, 0.3946, 0.3977, ..., 0.8553, 0.8532, 0.8521],

[0.4089, 0.4017, 0.4066, ..., 0.8512, 0.8524, 0.8521],

[0.4087, 0.4070, 0.4068, ..., 0.8522, 0.8526, 0.8521],

...,

[0.8797, 0.8838, 0.8820, ..., 0.3618, 0.1198, 0.1324],

[0.8799, 0.8793, 0.8809, ..., 0.1858, 0.1492, 0.1200],

[0.8744, 0.8743, 0.8734, ..., 0.4944, 0.3676, 0.2294]],

[[0.3364, 0.3285, 0.3296, ..., 0.8438, 0.8417, 0.8405],

[0.3430, 0.3349, 0.3361, ..., 0.8396, 0.8408, 0.8405],

[0.3428, 0.3387, 0.3332, ..., 0.8407, 0.8411, 0.8405],

...,

[0.8655, 0.8700, 0.8685, ..., 0.3365, 0.1044, 0.1027],

[0.8652, 0.8646, 0.8662, ..., 0.1660, 0.1404, 0.0928],

[0.8595, 0.8593, 0.8585, ..., 0.3903, 0.2755, 0.1885]]],

[[[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

...,

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000]],

[[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

...,

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000]],

[[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

...,

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000],

[1.0000, 1.0000, 1.0000, ..., 1.0000, 1.0000, 1.0000]]],

[[[0.6817, 0.6822, 0.6825, ..., 0.8097, 0.8100, 0.8096],

[0.6824, 0.6824, 0.6845, ..., 0.8118, 0.8118, 0.8118],

[0.6841, 0.6846, 0.6842, ..., 0.8118, 0.8118, 0.8118],

...,

[0.5531, 0.5530, 0.5468, ..., 0.7612, 0.7608, 0.7608],

[0.5524, 0.5501, 0.5457, ..., 0.7608, 0.7608, 0.7608],

[0.5553, 0.5527, 0.5483, ..., 0.7619, 0.7616, 0.7612]],

[[0.6503, 0.6508, 0.6510, ..., 0.7822, 0.7825, 0.7822],

[0.6510, 0.6510, 0.6510, ..., 0.7843, 0.7843, 0.7843],

[0.6528, 0.6532, 0.6537, ..., 0.7843, 0.7843, 0.7843],

...,

[0.5178, 0.5177, 0.5115, ..., 0.7337, 0.7333, 0.7333],

[0.5171, 0.5148, 0.5104, ..., 0.7333, 0.7333, 0.7333],

[0.5200, 0.5174, 0.5130, ..., 0.7345, 0.7341, 0.7338]],

[[0.6072, 0.6076, 0.6082, ..., 0.7430, 0.7433, 0.7430],

[0.6078, 0.6078, 0.6122, ..., 0.7451, 0.7451, 0.7451],

[0.6096, 0.6101, 0.6136, ..., 0.7451, 0.7451, 0.7451],

...,

[0.4825, 0.4824, 0.4762, ..., 0.7023, 0.7020, 0.7020],

[0.4818, 0.4795, 0.4751, ..., 0.7020, 0.7020, 0.7020],

[0.4847, 0.4821, 0.4777, ..., 0.7031, 0.7027, 0.7024]]]]),

tensor([24, 43, 12, 13, 29, 27, 26, 29, 38, 5, 2, 17, 30, 13, 16, 40, 30, 17,

21, 24, 19, 5, 24, 27, 4, 32, 25, 34, 7, 17, 0, 14, 35, 43, 39, 40,

7, 34, 12, 32, 34, 13, 19, 16, 1, 4, 2, 33, 40, 5, 17, 32, 17, 36,

23, 26, 41, 25, 43, 12, 33, 39, 3, 31]))
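`one_batch()` returns a pair. The first element is a rank-4 tensor of images shaped batch × channels × height × width. The second holds the class indices, one per image (64 of them above). The printed pixel values fall between 0 and 1 rather than looking normalized. If I read fastai v1 correctly, this is because `one_batch` denormalizes by default. The numpy sketch below only reproduces the shapes and the memory cost of such a batch, using zero-filled stand-in images.

```python
import numpy as np

batch_size, channels, height, width = 64, 3, 300, 300

# Stack 64 channels-first images into one rank-4 array, as a data loader does.
images = [np.zeros((channels, height, width), dtype=np.float32) for _ in range(batch_size)]
x = np.stack(images)
y = np.zeros(batch_size, dtype=np.int64)  # one class index per image

print(x.shape)   # (64, 3, 300, 300)
print(y.shape)   # (64,)
print(x.nbytes)  # 69120000 bytes, about 69 MB of float32 pixels per batch
```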

Our model

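- `learner.model` is the underlying model that’s going to train on our dataset.

- Its first element, `learner.model[0]` (printed below), is the piece that provides the transfer learning. It consists of the pretrained ResNet-50 layers, with ResNet’s own final pooling and classification layers removed. `cnn_learner` adds a new head on top, whose last layer has `data.c` outputs, one per class.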

data.c

44
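`data.c` is the number of classes, so the final layer of the head that `cnn_learner` adds produces 44 scores per image. For prediction, these scores are typically turned into probabilities with a softmax, and the index of the largest one points into `data.classes`. Here is a numpy sketch with random stand-in scores (not real model output).

```python
import numpy as np

n_classes = 44  # data.c

rng = np.random.default_rng(0)
logits = rng.normal(size=n_classes)  # stand-in for the head's output for one image


def softmax(z: np.ndarray) -> np.ndarray:
    # Subtracting the max avoids overflow in exp and does not change the result.
    e = np.exp(z - z.max())
    return e / e.sum()


probs = softmax(logits)
pred = int(np.argmax(probs))  # index into data.classes
print(pred, probs[pred])
```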

learner.model[0]

Sequential(

(0): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

(4): Sequential(

(0): Bottleneck(

(conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

)

(5): Sequential(

(0): Bottleneck(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(3): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

)

(6): Sequential(

(0): Bottleneck(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(512, 1024, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(3): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(4): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(5): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

)

(7): Sequential(

(0): Bottleneck(

(conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

)

)
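The shapes and parameter counts in `learner.summary()` below follow from the layer definitions printed above. Along each spatial axis, a convolution or pooling layer with kernel $k$, stride $s$ and padding $p$ maps size $n$ to $\lfloor (n + 2p - k) / s \rfloor + 1$. A `Conv2d` with `bias=False` has $c_{in} \cdot c_{out} \cdot k \cdot k$ weights, and a `BatchNorm2d` learns one scale and one shift per channel. The sketch below is plain Python with hypothetical helper names, checked against the first rows of the summary.

```python
def conv_out(n: int, k: int, s: int = 1, p: int = 0) -> int:
    """Spatial output size of a convolution or pooling layer along one axis."""
    return (n + 2 * p - k) // s + 1


def conv_params(c_in: int, c_out: int, k: int, bias: bool = False) -> int:
    """Learnable parameters of a square k x k Conv2d."""
    return c_in * c_out * k * k + (c_out if bias else 0)


def bn_params(c: int) -> int:
    """BatchNorm2d learns a weight and a bias per channel."""
    return 2 * c


# Trace a 300x300 input through the stride-2 layers of the ResNet-50 above.
n = conv_out(300, k=7, s=2, p=3)  # stem Conv2d: 150
n = conv_out(n, k=3, s=2, p=1)    # MaxPool2d: 75
sizes = [n]
for _ in range(3):                # first 3x3 conv of stages (5), (6), (7) has stride 2
    n = conv_out(n, k=3, s=2, p=1)
    sizes.append(n)
print(sizes)  # [75, 38, 19, 10]

print(conv_params(3, 64, 7))   # 9408, the first Conv2d row of the summary
print(bn_params(64))           # 128
print(conv_params(64, 64, 3))  # 36864
```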

learner.summary()

Sequential

======================================================================

Layer (type) Output Shape Param # Trainable

======================================================================

Conv2d [64, 150, 150] 9,408 False

______________________________________________________________________

BatchNorm2d [64, 150, 150] 128 True

______________________________________________________________________

ReLU [64, 150, 150] 0 False

______________________________________________________________________

MaxPool2d [64, 75, 75] 0 False

______________________________________________________________________

Conv2d [64, 75, 75] 4,096 False

______________________________________________________________________

BatchNorm2d [64, 75, 75] 128 True

______________________________________________________________________

Conv2d [64, 75, 75] 36,864 False

______________________________________________________________________

BatchNorm2d [64, 75, 75] 128 True

______________________________________________________________________

Conv2d [256, 75, 75] 16,384 False

______________________________________________________________________

BatchNorm2d [256, 75, 75] 512 True

______________________________________________________________________

ReLU [256, 75, 75] 0 False

______________________________________________________________________

Conv2d [256, 75, 75] 16,384 False

______________________________________________________________________

BatchNorm2d [256, 75, 75] 512 True

______________________________________________________________________

Conv2d [64, 75, 75] 16,384 False

______________________________________________________________________

BatchNorm2d [64, 75, 75] 128 True

______________________________________________________________________

Conv2d [64, 75, 75] 36,864 False

______________________________________________________________________

BatchNorm2d [64, 75, 75] 128 True

______________________________________________________________________

Conv2d [256, 75, 75] 16,384 False

______________________________________________________________________

BatchNorm2d [256, 75, 75] 512 True

______________________________________________________________________

ReLU [256, 75, 75] 0 False

______________________________________________________________________

Conv2d [64, 75, 75] 16,384 False

______________________________________________________________________

BatchNorm2d [64, 75, 75] 128 True

______________________________________________________________________

Conv2d [64, 75, 75] 36,864 False

______________________________________________________________________

BatchNorm2d [64, 75, 75] 128 True

______________________________________________________________________

Conv2d [256, 75, 75] 16,384 False

______________________________________________________________________

BatchNorm2d [256, 75, 75] 512 True

______________________________________________________________________

ReLU [256, 75, 75] 0 False

______________________________________________________________________

Conv2d [128, 75, 75] 32,768 False

______________________________________________________________________

BatchNorm2d [128, 75, 75] 256 True

______________________________________________________________________

Conv2d [128, 38, 38] 147,456 False

______________________________________________________________________

BatchNorm2d [128, 38, 38] 256 True

______________________________________________________________________

Conv2d [512, 38, 38] 65,536 False

______________________________________________________________________

BatchNorm2d [512, 38, 38] 1,024 True

______________________________________________________________________

ReLU [512, 38, 38] 0 False

______________________________________________________________________

Conv2d [512, 38, 38] 131,072 False

______________________________________________________________________

BatchNorm2d [512, 38, 38] 1,024 True

______________________________________________________________________

Conv2d [128, 38, 38] 65,536 False

______________________________________________________________________

BatchNorm2d [128, 38, 38] 256 True

______________________________________________________________________

Conv2d [128, 38, 38] 147,456 False

______________________________________________________________________

BatchNorm2d [128, 38, 38] 256 True

______________________________________________________________________

Conv2d [512, 38, 38] 65,536 False

______________________________________________________________________

BatchNorm2d [512, 38, 38] 1,024 True

______________________________________________________________________

ReLU [512, 38, 38] 0 False

______________________________________________________________________

Conv2d [128, 38, 38] 65,536 False

______________________________________________________________________

BatchNorm2d [128, 38, 38] 256 True

______________________________________________________________________

Conv2d [128, 38, 38] 147,456 False

______________________________________________________________________

BatchNorm2d [128, 38, 38] 256 True

______________________________________________________________________

Conv2d [512, 38, 38] 65,536 False

______________________________________________________________________

BatchNorm2d [512, 38, 38] 1,024 True

______________________________________________________________________

ReLU [512, 38, 38] 0 False

______________________________________________________________________

Conv2d [128, 38, 38] 65,536 False

______________________________________________________________________

BatchNorm2d [128, 38, 38] 256 True

______________________________________________________________________

Conv2d [128, 38, 38] 147,456 False

______________________________________________________________________

BatchNorm2d [128, 38, 38] 256 True

______________________________________________________________________

Conv2d [512, 38, 38] 65,536 False

______________________________________________________________________

BatchNorm2d [512, 38, 38] 1,024 True

______________________________________________________________________

ReLU [512, 38, 38] 0 False

______________________________________________________________________

Conv2d [256, 38, 38] 131,072 False

______________________________________________________________________

BatchNorm2d [256, 38, 38] 512 True

______________________________________________________________________

Conv2d [256, 19, 19] 589,824 False

______________________________________________________________________

BatchNorm2d [256, 19, 19] 512 True

______________________________________________________________________

Conv2d [1024, 19, 19] 262,144 False

______________________________________________________________________

BatchNorm2d [1024, 19, 19] 2,048 True

______________________________________________________________________

ReLU [1024, 19, 19] 0 False

______________________________________________________________________

Conv2d [1024, 19, 19] 524,288 False

______________________________________________________________________

BatchNorm2d [1024, 19, 19] 2,048 True

______________________________________________________________________

Conv2d [256, 19, 19] 262,144 False

______________________________________________________________________

BatchNorm2d [256, 19, 19] 512 True

______________________________________________________________________

Conv2d [256, 19, 19] 589,824 False

______________________________________________________________________

BatchNorm2d [256, 19, 19] 512 True

______________________________________________________________________

Conv2d [1024, 19, 19] 262,144 False

______________________________________________________________________

BatchNorm2d [1024, 19, 19] 2,048 True

______________________________________________________________________

ReLU [1024, 19, 19] 0 False

______________________________________________________________________

Conv2d [256, 19, 19] 262,144 False

______________________________________________________________________

BatchNorm2d [256, 19, 19] 512 True

______________________________________________________________________

Conv2d [256, 19, 19] 589,824 False

______________________________________________________________________

BatchNorm2d [256, 19, 19] 512 True

______________________________________________________________________

Conv2d [1024, 19, 19] 262,144 False

______________________________________________________________________

BatchNorm2d [1024, 19, 19] 2,048 True

______________________________________________________________________

ReLU [1024, 19, 19] 0 False

______________________________________________________________________

Conv2d [256, 19, 19] 262,144 False

______________________________________________________________________

BatchNorm2d [256, 19, 19] 512 True

______________________________________________________________________

Conv2d [256, 19, 19] 589,824 False

______________________________________________________________________

BatchNorm2d [256, 19, 19] 512 True

______________________________________________________________________

Conv2d [1024, 19, 19] 262,144 False

______________________________________________________________________

BatchNorm2d [1024, 19, 19] 2,048 True

______________________________________________________________________

ReLU [1024, 19, 19] 0 False

______________________________________________________________________

Conv2d [256, 19, 19] 262,144 False

______________________________________________________________________

BatchNorm2d [256, 19, 19] 512 True

______________________________________________________________________

Conv2d [256, 19, 19] 589,824 False

______________________________________________________________________

BatchNorm2d [256, 19, 19] 512 True

______________________________________________________________________

Conv2d [1024, 19, 19] 262,144 False

______________________________________________________________________

BatchNorm2d [1024, 19, 19] 2,048 True

______________________________________________________________________

ReLU [1024, 19, 19] 0 False

______________________________________________________________________

Conv2d [256, 19, 19] 262,144 False

______________________________________________________________________

BatchNorm2d [256, 19, 19] 512 True

______________________________________________________________________

Conv2d [256, 19, 19] 589,824 False

______________________________________________________________________

BatchNorm2d [256, 19, 19] 512 True

______________________________________________________________________

Conv2d [1024, 19, 19] 262,144 False

______________________________________________________________________

BatchNorm2d [1024, 19, 19] 2,048 True

______________________________________________________________________

ReLU [1024, 19, 19] 0 False

______________________________________________________________________

Conv2d [512, 19, 19] 524,288 False

______________________________________________________________________

BatchNorm2d [512, 19, 19] 1,024 True

______________________________________________________________________

Conv2d [512, 10, 10] 2,359,296 False

______________________________________________________________________

BatchNorm2d [512, 10, 10] 1,024 True

______________________________________________________________________

Conv2d [2048, 10, 10] 1,048,576 False

______________________________________________________________________

BatchNorm2d [2048, 10, 10] 4,096 True

______________________________________________________________________

ReLU [2048, 10, 10] 0 False

______________________________________________________________________

Conv2d [2048, 10, 10] 2,097,152 False

______________________________________________________________________

BatchNorm2d [2048, 10, 10] 4,096 True

______________________________________________________________________

Conv2d [512, 10, 10] 1,048,576 False

______________________________________________________________________

BatchNorm2d [512, 10, 10] 1,024 True

______________________________________________________________________

Conv2d [512, 10, 10] 2,359,296 False

______________________________________________________________________

BatchNorm2d [512, 10, 10] 1,024 True

______________________________________________________________________

Conv2d [2048, 10, 10] 1,048,576 False

______________________________________________________________________

BatchNorm2d [2048, 10, 10] 4,096 True

______________________________________________________________________

ReLU [2048, 10, 10] 0 False

______________________________________________________________________

Conv2d [512, 10, 10] 1,048,576 False

______________________________________________________________________

BatchNorm2d [512, 10, 10] 1,024 True

______________________________________________________________________

Conv2d [512, 10, 10] 2,359,296 False

______________________________________________________________________

BatchNorm2d [512, 10, 10] 1,024 True

______________________________________________________________________

Conv2d [2048, 10, 10] 1,048,576 False

______________________________________________________________________

BatchNorm2d [2048, 10, 10] 4,096 True

______________________________________________________________________

ReLU [2048, 10, 10] 0 False

______________________________________________________________________

AdaptiveAvgPool2d [2048, 1, 1] 0 False

______________________________________________________________________

AdaptiveMaxPool2d [2048, 1, 1] 0 False

______________________________________________________________________

Flatten [4096] 0 False

______________________________________________________________________

BatchNorm1d [4096] 8,192 True

______________________________________________________________________

Dropout [4096] 0 False

______________________________________________________________________

Linear [512] 2,097,664 True

______________________________________________________________________

ReLU [512] 0 False

______________________________________________________________________

BatchNorm1d [512] 1,024 True

______________________________________________________________________

Dropout [512] 0 False

______________________________________________________________________

Linear [44] 22,572 True

______________________________________________________________________

Total params: 25,637,484

Total trainable params: 2,182,572

Total non-trainable params: 23,454,912

Optimized with 'torch.optim.adam.Adam', betas=(0.9, 0.99)

Using true weight decay as discussed in https://www.fast.ai/2018/07/02/adam-weight-decay/

Loss function : FlattenedLoss

======================================================================

Callbacks functions applied

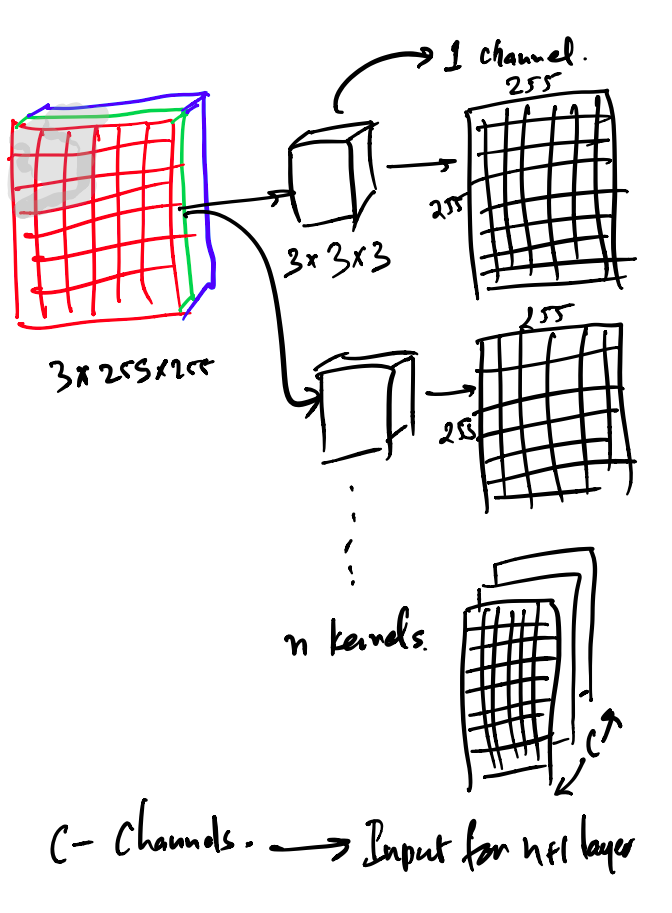

- The first layer here is:

Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

Its signature, from the documentation, is:

Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros') :: _ConvNd

The 3 here is in_channels, the number of input channels.

- Our image is represented by a $3 \times 300 \times 300$ tensor. Since it’s a color image, it has 3 input channels (red, green and blue); a grayscale image would have a single channel. Each channel can be imagined as a matrix of numbers.

- $64$ represents the number of output channels. We’ll see how we arrive at this.

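kernel_size is $7 \times 7$; we’ll see what a kernel is below. stride and padding are not matrices: stride=(2, 2) means the kernel moves 2 pixels at a time, both horizontally and vertically, and padding=(3, 3) adds a border of 3 zero-valued pixels on each side of the input.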
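To see how these three parameters fix the output shape, here is a small sketch of the standard output-size formula for a convolution (dilation 1), $\lfloor (n + 2p - k) / s \rfloor + 1$, applied to the first layer. The helper conv_out_size is hypothetical, written only for illustration.

```python
def conv_out_size(n, kernel_size, stride=1, padding=0):
    """Height/width of a convolution output for an n x n input (dilation 1)."""
    return (n + 2 * padding - kernel_size) // stride + 1

# First layer: Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)
side = conv_out_size(300, kernel_size=7, stride=2, padding=3)
print(side)  # 150, matching [64, 150, 150] in the summary

# 64 kernels, each spanning 3 input channels x 7 x 7, and no bias term
n_weights = 64 * 3 * 7 * 7
print(n_weights)  # 9408, matching the Param # column for this layer
```

With stride 1 the same padding of 3 would keep the output at $300 \times 300$; the stride of 2 is what halves it.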

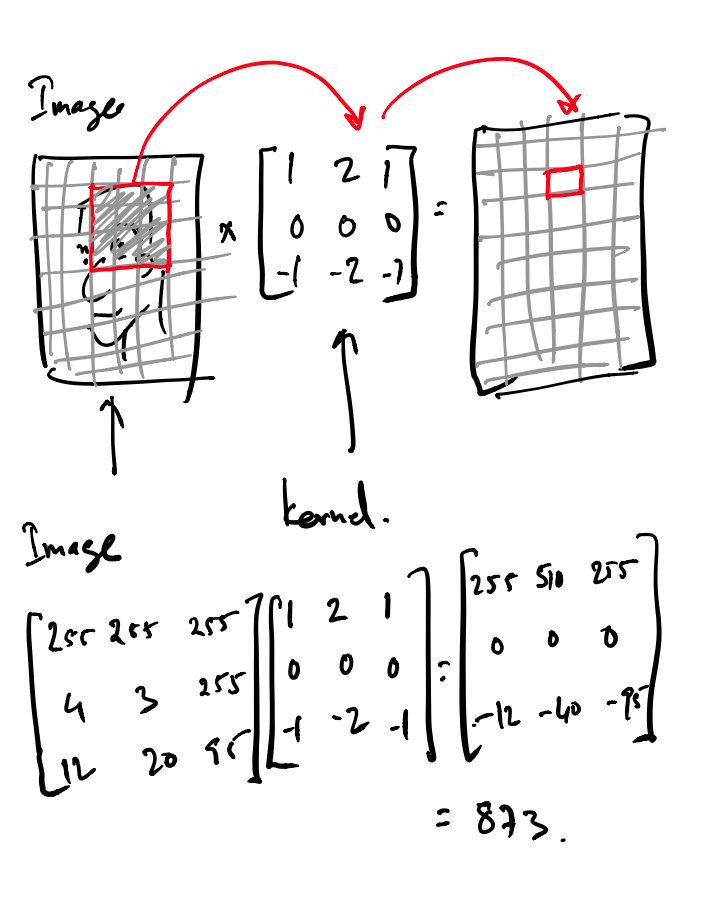

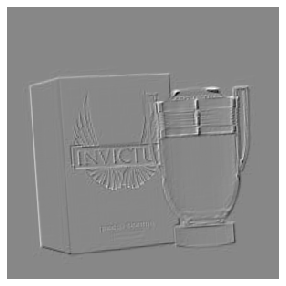

The Kernel

A kernel is simply a matrix of a specific size, in this case $7 \times 7$. The numbers in it are called weights. They are multiplied element-wise with a same-sized patch of the input and the products are summed; this is not standard matrix multiplication. Depending on its weights, a kernel picks out a specific feature of the input.

Probably the most powerful demonstration of this is Victor Powell’s interactive explainer, "Image Kernels explained visually".

The kernel is multiplied element-wise with a $7 \times 7$ patch of the image, and all the products are added up to yield a single output value. Sliding the kernel across the image repeats this at every position.
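A minimal sketch of that multiply-and-sum step, using a random single-channel $10 \times 10$ image and a $3 \times 3$ kernel (smaller than $7 \times 7$ only to keep it short). PyTorch’s F.conv2d does not flip the kernel (strictly speaking it computes a cross-correlation), so the manual sum matches its output exactly at the corresponding position.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
image = torch.rand(1, 1, 10, 10)   # batch of one single-channel 10 x 10 "image"
kernel = torch.rand(1, 1, 3, 3)    # one 3 x 3 kernel

# Element-wise multiply the kernel with the top-left 3 x 3 patch, then sum
patch = image[0, 0, 0:3, 0:3]
manual_value = (patch * kernel[0, 0]).sum()

# F.conv2d slides the same kernel over every position of the image
conv_out = F.conv2d(image, kernel)
print(conv_out.shape)                                       # torch.Size([1, 1, 8, 8])
print(torch.allclose(manual_value, conv_out[0, 0, 0, 0]))  # True
```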

The weights of a kernel determine which attributes it accentuates, for example colors, shapes or angles. The features become broader and more complex with depth, so kernels in later layers try to identify attributes such as eyeballs or hair.

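In our example images we assume the kernel is $3 \times 3 \times 3$ to make it easier to visualise.

For a color image, each kernel in the first layer is actually $3 \times 7 \times 7$: one $7 \times 7$ slice per input channel. The results of the three slices are summed, so each kernel produces a single output channel (again, think of this as a matrix), which here is $150 \times 150$ because of the stride of 2.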

The trick is to imagine each kernel focusing on one specific feature of the image. Detecting many attributes therefore demands many kernels, which is why layers usually have 64, 256, 512 or more of them.

Each kernel produces one channel, so at the end of each convolution layer we end up with as many channels as there are kernels. These channels serve as the input to the next layer.
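In PyTorch terms, a layer’s kernels are stacked in its weight tensor with shape [out_channels, in_channels, height, width]. This sketch rebuilds the first layer with random weights (not the pretrained ones) and checks that output channel 5 comes from kernel 5 alone.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
conv = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)
print(conv.weight.shape)   # torch.Size([64, 3, 7, 7]) -> 64 kernels of 3 x 7 x 7

x = torch.rand(1, 3, 300, 300)
with torch.no_grad():
    out = conv(x)
    print(out.shape)       # torch.Size([1, 64, 150, 150]) -> one channel per kernel

    # Output channel 5 is produced by kernel 5 on its own
    channel5 = F.conv2d(x, conv.weight[5:6], stride=2, padding=3)
    print(torch.allclose(out[:, 5:6], channel5, atol=1e-4))  # True
```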

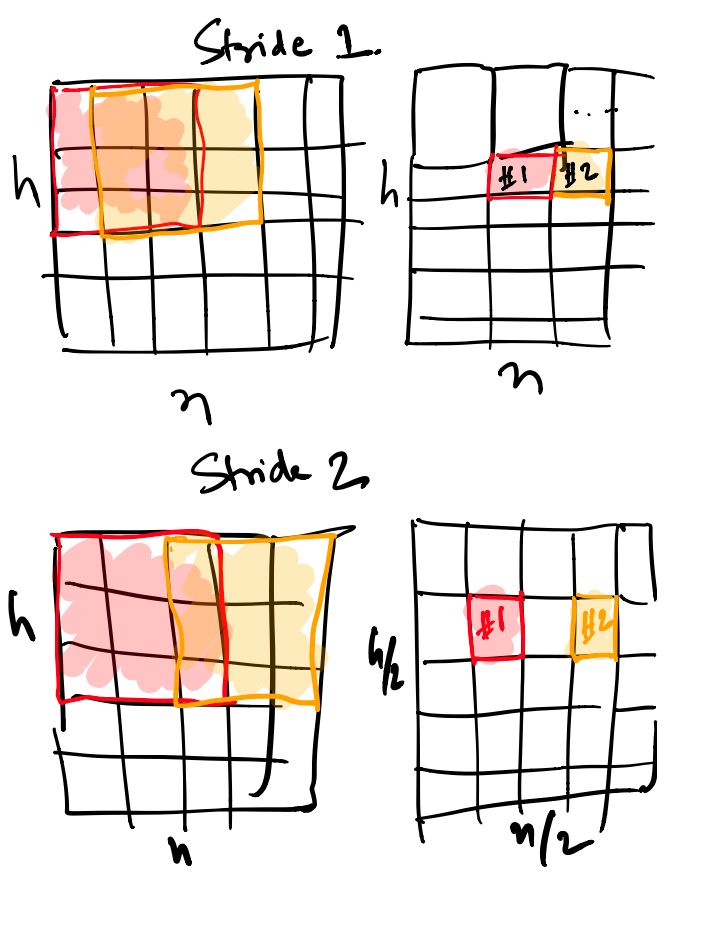

Stride

Stride, as seen in the model description, is the step the kernel takes from one position on the input to the next. With a stride of 2 the kernel jumps 2 pixels at a time instead of one.

Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

The output size reduces

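This results in an output with half the height and width of the input: the first convolution turns the $300 \times 300$ image into $150 \times 150$ channels, and the max-pooling layer after it (also stride 2) halves this again. Note the fall from 150 to 75 in the summary below. Hence, as the number of channels grows, the spatial size keeps halving.

To compensate for the reduced size, which essentially means dropping information, the number of kernels is doubled.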

======================================================================

Layer (type) Output Shape Param # Trainable

======================================================================

Conv2d [64, 150, 150] 9,408 False

______________________________________________________________________

BatchNorm2d [64, 150, 150] 128 True

______________________________________________________________________

ReLU [64, 150, 150] 0 False

______________________________________________________________________

MaxPool2d [64, 75, 75] 0 False

______________________________________________________________________

Conv2d [64, 75, 75] 4,096 False

______________________________________________________________________

BatchNorm2d [64, 75, 75] 128 True

______________________________________________________________________

Conv2d [64, 75, 75] 36,864 False

______________________________________________________________________

BatchNorm2d [64, 75, 75] 128 True

______________________________________________________________________

Conv2d [256, 75, 75] 16,384 False

______________________________________________________________________

BatchNorm2d [256, 75, 75] 512 True

As the stride grows, the height and width of the output shrink by roughly the same factor.
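A quick empirical check, as a sketch: the first layer’s convolution is rebuilt with random weights and strides of 1, 2 and 4, followed by the stride-2 max-pooling layer from the model (MaxPool2d(kernel_size=3, stride=2, padding=1)) that produces the 150 to 75 drop.

```python
import torch
import torch.nn as nn

x = torch.zeros(1, 3, 300, 300)   # batch of one 300 x 300 RGB image

shapes = {}
with torch.no_grad():
    # Same layer as the model's first convolution, with different strides
    for s in (1, 2, 4):
        conv = nn.Conv2d(3, 64, kernel_size=7, stride=s, padding=3, bias=False)
        shapes[s] = tuple(conv(x).shape)
        print(s, shapes[s])
    # 1 (1, 64, 300, 300)
    # 2 (1, 64, 150, 150)
    # 4 (1, 64, 75, 75)

    # The max-pooling layer that follows the first convolution: 150 -> 75
    pool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
    pooled = pool(torch.zeros(1, 64, 150, 150))
    print(tuple(pooled.shape))   # (1, 64, 75, 75)
```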

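Next we create a $3 \times 3 \times 3$ kernel with an additional leading axis. The additional axis indicates there can be n kernels; think of it as an array of $3 \times 3 \times 3$ kernels. Here n is 1, and expand repeats the same $3 \times 3$ pattern for each of the 3 input channels.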

# One 3 x 3 pattern expanded to [1, 3, 3, 3]: 1 kernel x 3 input channels x 3 x 3
sample_kernel = tensor([[0, -5/3, 1],
                        [-5/3, -5/3, 1],
                        [1, 1, 1]]).expand(1, 3, 3, 3)/6

sample_kernel.shape

torch.Size([1, 3, 3, 3])

# The same image we used above

sample_image = data.train_ds[15][0]; sample_image

t = sample_image.data; t.shape

torch.Size([3, 300, 300])

F.conv2d expects a batch of images with shape [batch, channels, height, width], so we add a leading axis to the image tensor t, turning it into a batch of one.

t[None].shape

torch.Size([1, 3, 300, 300])

e = F.conv2d(t[None], sample_kernel)

show_image(e[0], figsize=(5,5))


We discovered the edges of the image with the kernel we used.
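Because the dataset isn’t available outside the notebook, here is a self-contained sketch of the same experiment on a synthetic image: a black background with a white square stands in for data.train_ds[15], and plain torch.tensor replaces fastai’s tensor. The kernel’s weights sum to zero, so flat regions give (almost) no response, while the square’s border lights up.

```python
import torch
import torch.nn.functional as F

# Kernel from the post: one 3 x 3 pattern repeated over the 3 input channels
edge_kernel = torch.tensor([[0., -5/3, 1.],
                            [-5/3, -5/3, 1.],
                            [1., 1., 1.]]).expand(1, 3, 3, 3) / 6

# Synthetic stand-in image: black 300 x 300 with a white 100 x 100 square
img = torch.zeros(3, 300, 300)
img[:, 100:200, 100:200] = 1.0

e = F.conv2d(img[None], edge_kernel)
print(e.shape)                 # torch.Size([1, 1, 298, 298]); no padding, so 2 pixels are lost

print(e[0, 0, 10, 10].item())  # 0.0: flat black background
print(e[0, 0, 150, 150])       # close to 0: flat white interior
print(e[0, 0, 98, 150])        # about 1.5: top edge of the square
```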

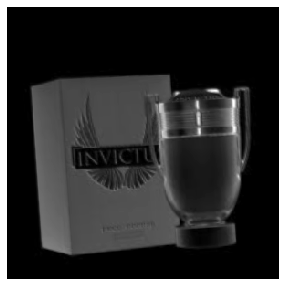

# With a different set of weights

sample_kernel2 = tensor([[12., 2., 1.],
                         [2., 2., 1.],
                         [1., 1., 1.]]).expand(1, 3, 3, 3)/6

e2 = F.conv2d(t[None], sample_kernel2)

show_image(e2[0], figsize=(5,5))


learner.model

Sequential(

(0): Sequential(

(0): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

(4): Sequential(

(0): Bottleneck(

(conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

)

(5): Sequential(

(0): Bottleneck(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(3): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

)

(6): Sequential(

(0): Bottleneck(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(512, 1024, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(3): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(4): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(5): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

)

(7): Sequential(

(0): Bottleneck(

(conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

)

)

(1): Sequential(

(0): AdaptiveConcatPool2d(

(ap): AdaptiveAvgPool2d(output_size=1)

(mp): AdaptiveMaxPool2d(output_size=1)

)

(1): Flatten()

(2): BatchNorm1d(4096, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(3): Dropout(p=0.25, inplace=False)

(4): Linear(in_features=4096, out_features=512, bias=True)

(5): ReLU(inplace=True)

(6): BatchNorm1d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(7): Dropout(p=0.5, inplace=False)

(8): Linear(in_features=512, out_features=44, bias=True)

)

)

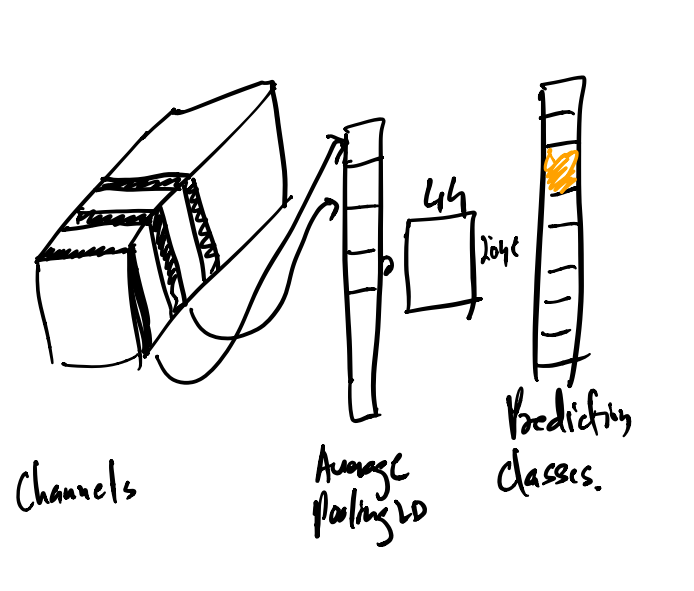

Classification (AdaptiveAvgPool2d)

When we call learner.predict, it returns the Category of the input image.

That category is the one, out of the 44 classes we fed the system, for which the model outputs the highest value. At the end of the convolutional part of our model we were left with 2048 channels:

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

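These need to be reduced to a single list of 44 values, with the highest value for the category our input belongs to.

First we take the average of each channel’s output (a $10 \times 10$ grid here) as a single value and store these in a tensor. Imagine this as a $2048 \times 1$ array or matrix: each of the channels from our earlier discussion is reduced to its average. This is average pooling. fastai’s head actually uses AdaptiveConcatPool2d, which also takes the maximum of each channel and concatenates both results, which is why the Flatten layer in the summary outputs 4096 values.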
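A minimal sketch of average pooling on its own, using a random tensor as a stand-in for the [2048, 10, 10] output of the last block: AdaptiveAvgPool2d(output_size=1) is just the mean over each channel’s height and width.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
features = torch.rand(1, 2048, 10, 10)   # random stand-in for the last block's output

avg = nn.AdaptiveAvgPool2d(output_size=1)(features)
print(avg.shape)   # torch.Size([1, 2048, 1, 1]) -> one value per channel

# The same thing by hand: the mean of each channel's 10 x 10 grid
manual = features.mean(dim=(2, 3), keepdim=True)
print(torch.allclose(avg, manual, atol=1e-6))  # True
```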

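We then multiply this vector by weight matrices (the two Linear layers, 4096 to 512 and then 512 to 44, with BatchNorm1d, Dropout and ReLU in between) to get a score for each of our 44 categories; the highest score gives the predicted category.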
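Here is a plain-PyTorch sketch of the head printed above, for illustration only. ConcatPool is a hypothetical stand-in for fastai’s AdaptiveConcatPool2d (the concatenation order is an assumption), and the weights are random, so the predicted indices mean nothing; the point is the shapes and the parameter counts, which match the summary.

```python
import torch
import torch.nn as nn

class ConcatPool(nn.Module):
    """Hypothetical stand-in for AdaptiveConcatPool2d: max and average pooling side by side."""
    def __init__(self):
        super().__init__()
        self.ap = nn.AdaptiveAvgPool2d(1)
        self.mp = nn.AdaptiveMaxPool2d(1)

    def forward(self, x):
        return torch.cat([self.mp(x), self.ap(x)], dim=1)

head = nn.Sequential(
    ConcatPool(),              # [N, 2048, 10, 10] -> [N, 4096, 1, 1]
    nn.Flatten(),              # -> [N, 4096]
    nn.BatchNorm1d(4096),
    nn.Dropout(p=0.25),
    nn.Linear(4096, 512),      # 4096 * 512 + 512 = 2,097,664 parameters
    nn.ReLU(inplace=True),
    nn.BatchNorm1d(512),
    nn.Dropout(p=0.5),
    nn.Linear(512, 44),        # 512 * 44 + 44 = 22,572 parameters, one score per category
)

head.eval()                    # use running BatchNorm stats and disable dropout
with torch.no_grad():
    scores = head(torch.rand(2, 2048, 10, 10))
print(scores.shape)            # torch.Size([2, 44])
print(scores.argmax(dim=1))    # index of the highest-scoring category per image
```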